Campus to Canton Series: Which Players Hit in the NFL?

Here at DLF, we strive to evolve and grow with the game of dynasty fantasy football. In that spirit, we have started a new article series introducing the latest dynasty craze: Campus to Canton (C2C) leagues. My goal is to start with the basics and establish an understanding of how everything works. As the series progresses, I aim to dive into strategies, player values, buys and sells, and more! If you're new to C2C and missed the earlier parts of the series, make sure to go back and check them out, starting with part one.

Welcome back! If you've been keeping up with this series, you know that we've focused on freshman hit rates for the past couple of articles. Along the way, we've gained some insight into improving our odds of hitting on a freshman, but I felt there was still more to learn. While the previous articles focused on the recruits themselves, this time we're turning our attention to the NFL. What better way to figure out who hits in the NFL than by looking at who has already hit in the NFL?

To do that, I used @FF_Spaceman's NFL Database, which is available through his Patreon. I started with every player who has had a top-24 fantasy season in the past seven years, then narrowed that group to players drafted in 2016 or later, so we can look at the same time frame as the last article (the 2013 recruiting class corresponds to the 2016 draft class). That leaves 128 players who have had at least one top-24 fantasy season since being drafted. Of those 128, 77 have also had a top-12 season and 42 have had a top-five season.

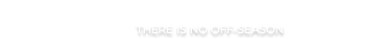

Remember in the last article when I told you not to draft highly rated tight ends in your supplemental drafts? Of the 27 TEs who have posted a top-24 season, only one was a five-star recruit. The majority of TEs who have hit were three-star or lower recruits going into college.

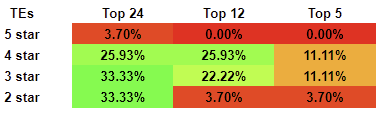

Five-star recruits hit at a better rate at WR than at TE, but we still see a large discrepancy between the recruiting tiers. Only six of the 36 WRs who have posted a top-24 season were five-star recruits, and only one of them (Calvin Ridley) has gone on to have a top-five fantasy season. Four-star recruits account for 14 hits in the same time frame, which is a little more promising, but three-star and lower recruits combine for 16 of the 36.

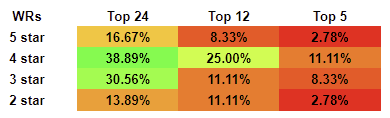

The hit rates for high-end quarterbacks are poor. Combining the four- and five-star QBs gives us 7 of 21 total hits, two fewer than the three-star prospects alone. While two two-star QBs have hit in this time frame, two-star QBs don't appear to be good bets for a long and productive career.

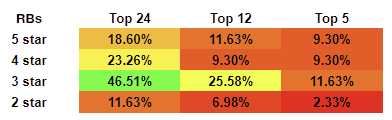

RBs produce the highest hit rate of any position group, but once again the three-star recruits have two more hits than the four- and five-star recruits combined.

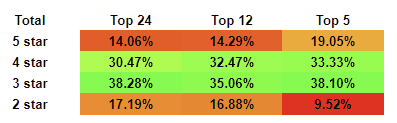

Combine all of the positions, and it's clear that most players who have posted a top-24 season were three-star or lower recruits: 71 of the 128. Those proportions don't change much as we move up to the top-12 and even the top-five finishers.
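To put the counts quoted throughout this article on a common footing, here is a quick Python sketch that converts them to percentages. It uses only the figures stated in the text (the full tier splits for TEs, QBs, and RBs aren't listed, so those are left out), and the names `share`, `overall`, `finishes`, and `wr_share` are purely illustrative.

```python
# Counts quoted in this article: players with a top-24 season, drafted 2016 or later.
# Only figures stated in the text are used; unlisted tier splits are omitted.


def share(part: int, whole: int) -> float:
    """Return part as a percentage of whole, rounded to one decimal place."""
    return round(100 * part / whole, 1)


TOTAL = 128  # all players with at least one top-24 season

# Overall split by recruiting tier: 71 of the 128 were three-star or lower.
overall = {
    "three-star or lower": share(71, TOTAL),
    "four- or five-star": share(TOTAL - 71, TOTAL),
}

# How many of the 128 went on to better finishes.
finishes = {"top-12": share(77, TOTAL), "top-five": share(42, TOTAL)}

# WR tier split (6 + 14 + 16 = 36 WRs with a top-24 season).
wr_tiers = {"five-star": 6, "four-star": 14, "three-star or lower": 16}
wr_total = sum(wr_tiers.values())
wr_share = {tier: share(n, wr_total) for tier, n in wr_tiers.items()}

if __name__ == "__main__":
    print("Overall:", overall)
    print("Finishes:", finishes)
    print("WRs:", wr_share)
    print("Five-star TEs:", share(1, 27))
    print("Four/five-star QBs:", share(7, 21))
```

Run as a script, it shows that 55.5% of the 128 players were three-star or lower recruits, while five-star recruits make up just 16.7% of the WRs who hit.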

My goal throughout the past three articles has been to show that the hit rates for top recruits aren't as good as many have been led to believe. We've looked at recruiting ratings, positions, and conferences to find who hits. While we can find better success by drafting certain positions from certain conferences, one thing remains clear: it doesn't matter what a player's recruiting rank is going into college. Yes, there will be plenty of hits from the top-end recruits (about 1 in 5), but there will be even more hits from the lower-ranked players. My research for these articles hasn't focused on what NFL teams look for when drafting players, but my previous research found that, generally speaking, the most productive college players become the most productive NFL players.

While we have no true way of knowing who will hit before they see the field, there are ways to increase our odds. I don't want the takeaway from this series to be that you should never draft freshmen; there's a time and a place where drafting them makes sense. What I would like to see is C2C and devy managers putting more emphasis on players who have already broken out. If those players have already been picked up via waivers, then by all means keep drafting freshmen. However, if a freshman you drafted isn't producing at the expected level, be quick to move off of him while he still retains his high value.

There's so much strategy involved in C2C drafts. Hopefully, all of this research will help you make wiser decisions in your drafts and increase your odds of hitting. As always, if you need a second opinion during a draft or trade negotiation, feel free to reach out to me on Twitter. See you in a couple of weeks as we continue to dive into Campus to Canton leagues.
